Building an AI-Powered Content Summarization and Q&A App with LangChain and Hugging Face

Imagine having an application that could summarize any piece of text and then answer your questions about it—all with a single click. Sounds like science fiction? It’s very real, and today, I’ll walk you through building just such an AI-powered app using LangChain, Hugging Face models, and Streamlit. This project is accessible, beginner-friendly, and also powerful enough to show you the potential of chaining language models together. Ready to dive in? Let’s get started!

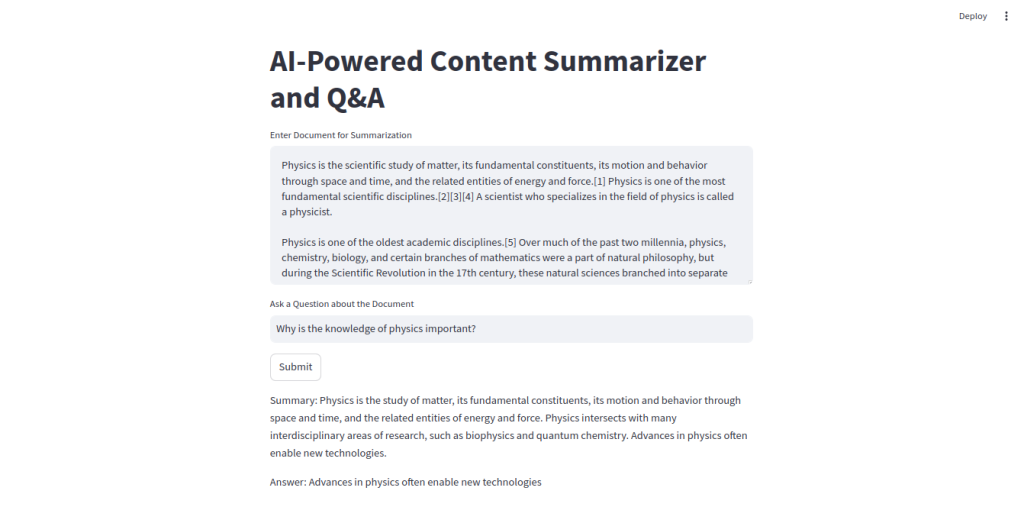

Our mission today is to build an AI-Powered Content Summarizer and Q&A Application. This app will take in any text document, generate a concise summary, and answer questions about it. We’ll use Hugging Face’s language models for the summarization and question-answering tasks, LangChain for model chaining, and Streamlit for a user-friendly interface.

Here’s a look at what we’ll cover:

- Initial Setup

- Directory Structure and File Explanations

- Building the Core Components

- Creating the Streamlit Interface

- Running and Testing the Application

At the end of this tutorial, you’ll have a fully functional AI application, like the one in the screenshot above.

We’re here to have fun and learn as we go, so let’s break this down step-by-step!

Step 1: Initial Setup

Before diving into the code, let’s ensure you have everything you need to get this project up and running. We’ll start by setting up a virtual environment and installing the required dependencies.

Creating a Virtual Environment

Using a virtual environment helps keep dependencies organized. To set one up:

```bash
python3 -m venv venv
source venv/bin/activate  # On Windows, use venv\Scripts\activate
```

Installing Dependencies

Once your virtual environment is activated, install the necessary libraries. We’ll be using LangChain, Hugging Face’s Transformers, Streamlit, and PyTorch:

```bash
pip install langchain transformers streamlit torch langchain-community
```

Let’s also record them in a requirements.txt file, so the environment can be recreated later with `pip install -r requirements.txt`:

```text
langchain
langchain-community
transformers
streamlit
torch
```
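Before writing any app code, it can help to confirm that the packages from requirements.txt actually landed in the active virtual environment. The sketch below is a hypothetical helper (`check_requirements` is our own name, not part of any library) that uses the standard library’s `importlib.metadata` to look up installed versions; it assumes you run it with the venv activated.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as listed in this tutorial's requirements.txt (assumed list).
REQUIRED = ["langchain", "langchain-community", "transformers", "streamlit", "torch"]


def check_requirements(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in check_requirements(REQUIRED).items():
        print(f"{name:22} {ver or 'MISSING'}")
```

Any package reported as MISSING can be installed with the pip command above.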

With our environment ready, we can move on to setting up our project files.

Step 2: Directory Structure and Project Layout

Let’s break down our directory structure to keep things modular and organized. Here’s how our project layout will look:

```text
langchain_app/
├── app.py               # Main Streamlit app
├── requirements.txt     # List of Python dependencies
├── config/              # Config files (optional)
│   └── config.yaml      # Config file (optional)
├── src/                 # Core application source code
│   ├── __init__.py      # Makes src an importable package
│   ├── chains.py        # LangChain pipeline setup and utility functions
│   ├── models.py        # Hugging Face model initialization
│   └── utils.py         # Helper functions
└── README.md            # Project documentation
```

Each folder has its place, and we’re keeping our code modular for easy understanding and maintenance. Let’s jump into the details of each file and build out our app.
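If you’d rather not create these files by hand, a few lines of `pathlib` can lay out the skeleton. The sketch below is a hypothetical helper (`scaffold` and `LAYOUT` are our own names); it only creates empty files that don’t exist yet, so it is safe to rerun, and it includes the optional config file as an empty placeholder.

```python
from pathlib import Path

# Relative paths from the project layout above (config/config.yaml is optional).
LAYOUT = [
    "app.py",
    "requirements.txt",
    "README.md",
    "config/config.yaml",
    "src/__init__.py",
    "src/chains.py",
    "src/models.py",
    "src/utils.py",
]


def scaffold(root):
    """Create folders and empty files under root; existing files are left untouched."""
    root = Path(root)
    created = []
    for rel in LAYOUT:
        path = root / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        if not path.exists():
            path.touch()
            created.append(rel)
    return created


if __name__ == "__main__":
    for rel in scaffold("langchain_app"):
        print("created", rel)
```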

Step 3: Building the Core Components

The src/ folder holds three modules: models.py, chains.py, and utils.py (for helper functions). The two that do the real work are models.py and chains.py, so let’s look at each of them.

1. Loading Models in models.py

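In models.py, we load two pre-trained models from the Hugging Face Hub: facebook/bart-large-cnn, a BART model fine-tuned on the CNN/DailyMail news dataset for summarization, and deepset/roberta-base-squad2, a RoBERTa model fine-tuned on SQuAD 2.0 for extractive question answering (it answers by selecting a span of the text you give it).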

Code Explanation:

```python
from transformers import pipeline

# Load the summarization model (BART fine-tuned on CNN/DailyMail)
summarizer = pipeline("summarization", model="facebook/bart-large-cnn")

# Load the extractive question-answering model (RoBERTa fine-tuned on SQuAD 2.0)
qa_pipeline = pipeline("question-answering", model="deepset/roberta-base-squad2")
```

Here, we use Hugging Face’s pipeline function to create two pipelines:

- summarizer: Generates summaries from longer documents.

- qa_pipeline: Answers questions based on provided text (in our case, the summary).
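Because models.py builds both pipelines at import time, the first import downloads and loads the model weights, which is slow. A common alternative is to load each pipeline lazily and cache it so it is built only once per process. The sketch below shows that pattern with `functools.lru_cache`; `make_cached_loader`, `get_pipeline`, `get_summarizer`, and `get_qa_pipeline` are hypothetical names, and the loader is passed in so the caching can be checked without downloading anything. Inside a Streamlit app, the `@st.cache_resource` decorator serves the same purpose.

```python
from functools import lru_cache
from typing import Any, Callable

Loader = Callable[[str, str], Any]


def transformers_loader(task: str, model: str) -> Any:
    """Build a Hugging Face pipeline; transformers is imported only when a model is needed."""
    from transformers import pipeline

    return pipeline(task, model=model)


def make_cached_loader(load: Loader) -> Loader:
    """Wrap load so each (task, model) pair is built at most once per process."""

    @lru_cache(maxsize=None)
    def get(task: str, model: str) -> Any:
        return load(task, model)

    return get


# Hypothetical lazy replacement for the module-level pipelines in models.py.
get_pipeline = make_cached_loader(transformers_loader)


def get_summarizer() -> Any:
    return get_pipeline("summarization", "facebook/bart-large-cnn")


def get_qa_pipeline() -> Any:
    return get_pipeline("question-answering", "deepset/roberta-base-squad2")
```

The trade-off: the app starts faster, but the first request pays the loading cost instead.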

2. Chaining the Models in chains.py

Now, let’s build the functionality to chain our models together. Here, we define run_chain, a function that first summarizes the document and then answers questions based on the summary.

Code Explanation:

```python
from .models import summarizer, qa_pipeline


def run_chain(document, question):
    # Step 1: Summarize the document.
    # max_length/min_length bound the summary length in tokens; do_sample=False makes decoding deterministic.
    summary_output = summarizer(document, max_length=130, min_length=30, do_sample=False)
    summary = summary_output[0]["summary_text"]

    # Step 2: Use the summary as the context for answering the question
    answer_output = qa_pipeline({"context": summary, "question": question})
    answer = answer_output["answer"]

    return {"summary": summary, "answer": answer}
```

Here’s how run_chain works:

- Summarize: We pass the document to summarizer, which returns a summary.

- Question-Answering: Using the summary as context, we pass it along with the question to qa_pipeline, which returns an answer.
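Two caveats are worth knowing about run_chain. First, facebook/bart-large-cnn accepts at most 1,024 input tokens, so a long document has to be truncated or split. Second, because the question is answered from the summary, details the summarizer drops cannot be found. The sketch below is one hypothetical way to handle the first point: it splits the document into word-based chunks, summarizes each one, and joins the partial summaries before answering. Word count is only a rough stand-in for token count, so `max_words=600` is an assumed, conservative value, and `chunk_words` is our own helper. The pipelines are passed in as arguments, so the logic can be exercised with stand-ins that return the same output shapes as the real pipelines.

```python
from typing import Any, Callable, Dict, List


def chunk_words(text: str, max_words: int = 600) -> List[str]:
    """Split text into consecutive chunks of at most max_words whitespace-separated words."""
    words = text.split()
    return [" ".join(words[i : i + max_words]) for i in range(0, len(words), max_words)]


def run_chain(
    document: str,
    question: str,
    summarizer: Callable[..., Any],
    qa_pipeline: Callable[..., Any],
    max_words: int = 600,
) -> Dict[str, str]:
    """Summarize the document chunk by chunk, then answer the question from the combined summary."""
    partial_summaries = []
    for chunk in chunk_words(document, max_words):
        # A summarization pipeline returns a list like [{"summary_text": "..."}].
        output = summarizer(chunk, max_length=130, min_length=30, do_sample=False)
        partial_summaries.append(output[0]["summary_text"])
    summary = " ".join(partial_summaries)

    # A question-answering pipeline returns a dict with an "answer" key (plus score/start/end).
    answer_output = qa_pipeline({"context": summary, "question": question})
    return {"summary": summary, "answer": answer_output["answer"]}
```

If you adopt this version, the call in app.py becomes `run_chain(document, question, summarizer, qa_pipeline)`, with the two pipelines imported from src.models. Injecting the pipelines is a design choice for testability, not something the original chains.py requires.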

Step 4: Creating the Streamlit Interface in app.py

Now that the core components are ready, let’s create a user-friendly interface with Streamlit. app.py will act as our main application file, where users can input text and ask questions.

Code Explanation:

```python
import streamlit as st

from src.chains import run_chain

st.title("AI-Powered Content Summarizer and Q&A")

# Input widgets: a multi-line box for the document and a single-line box for the question
document = st.text_area("Enter Document for Summarization", height=200)
question = st.text_input("Ask a Question about the Document")

# Streamlit reruns this script on every interaction; st.button returns True only on the run triggered by the click
if st.button("Submit"):
    if document and question:
        result = run_chain(document, question)
        st.write("Summary:", result["summary"])
        st.write("Answer:", result["answer"])
    else:
        st.warning("Please enter both a document and a question.")
```

Here’s what each part of the interface does:

- Title: Sets the title of our app.

- Text Area: Provides a space for users to input a document to summarize.

- Text Input: Allows users to enter a question based on the document.

- Submit Button: When clicked, triggers run_chain if both inputs are provided, displaying the summary and answer.
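One small gap in this logic: a document or question made only of spaces counts as non-empty, so it would still be sent to the models. The sketch below pulls the submit logic into a plain function (`handle_submit` is a hypothetical name) that strips whitespace first and returns what to display, which keeps it testable without starting Streamlit.

```python
from typing import Callable, Dict, List, Tuple

Chain = Callable[[str, str], Dict[str, str]]


def handle_submit(document: str, question: str, chain: Chain) -> List[Tuple[str, str]]:
    """Return (kind, text) rows for the UI, where kind is "warning" or "write"."""
    document, question = document.strip(), question.strip()
    if not document or not question:
        return [("warning", "Please enter both a document and a question.")]
    result = chain(document, question)
    return [("write", f"Summary: {result['summary']}"), ("write", f"Answer: {result['answer']}")]


# Possible use inside app.py (assumes st and run_chain are imported there):
# if st.button("Submit"):
#     for kind, text in handle_submit(document, question, run_chain):
#         if kind == "warning":
#             st.warning(text)
#         else:
#             st.write(text)
```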

Step 5: Running and Testing the Application

To test the app, run the following command:

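```bash
streamlit run app.py
```

Streamlit starts a local server (by default at http://localhost:8501) and opens it in your browser, where you’ll see the app interface. Because app.py imports the models when it starts, the first launch downloads both models from the Hugging Face Hub, which can take a while. Paste any text into the document box, type a related question, and click “Submit” to see the summarization and Q&A in action.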

Wrapping Up

Congratulations! You’ve built a fully functional AI-powered content summarization and question-answering application. Beyond being an exercise, it shows how chaining two language models, a summarizer feeding a question-answering model, produces a small but genuinely useful tool. You now have a clearer picture of how LangChain and Hugging Face models can come together in a cohesive app that condenses any text and answers questions about it.

Feel free to customize, expand, or experiment with the models. This project is a starting point, and the possibilities are endless! With LangChain and Hugging Face in your toolkit, you’re ready to tackle more complex language processing projects and build even more sophisticated AI-powered applications. Happy coding!
