Building a Personal AI Assistant Like Jarvis: A Complete Technical Guide

Parijat Software Team

Voice AI Expert

If you grew up watching Iron Man, you probably dreamed of having your own Jarvis. An AI that just... gets you. One that listens when you need it, stays quiet when you don't, and actually does useful things instead of just answering trivia questions.

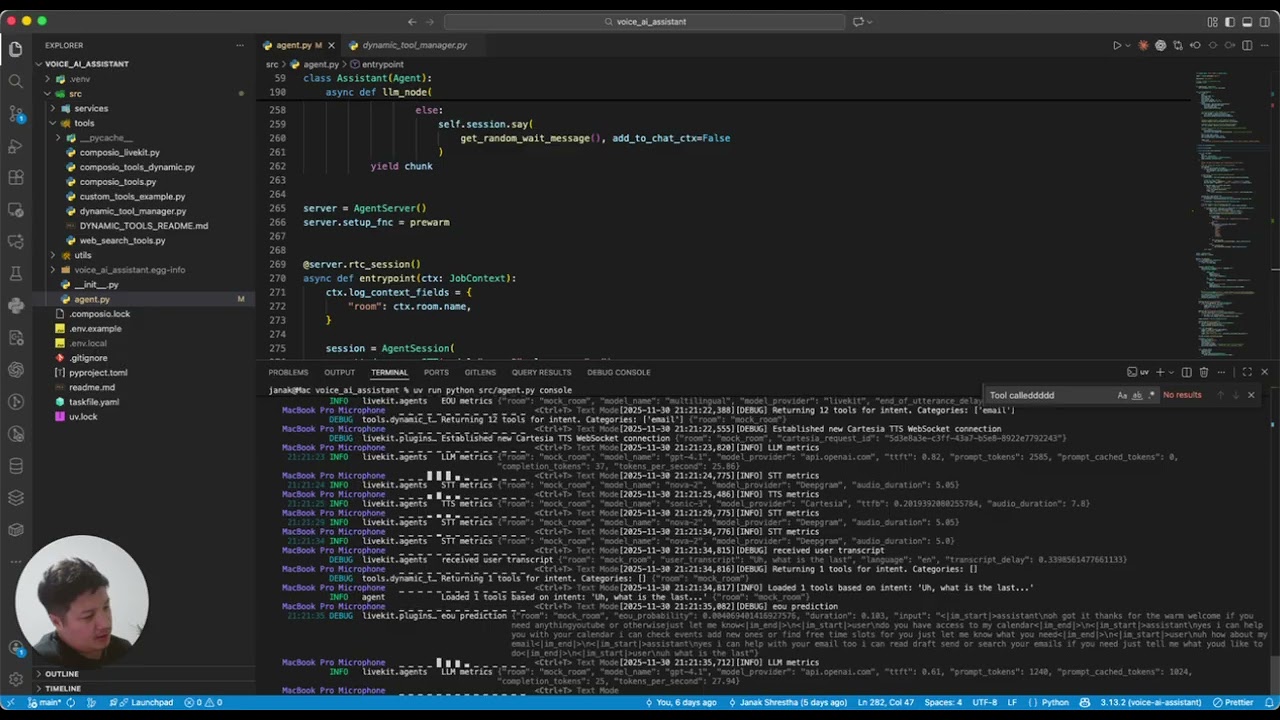

We recently built exactly that. A personal voice AI assistant that runs from the desktop, manages Gmail and Google Calendar through natural conversation, searches the web for real-time information, and responds to wake words like the sci-fi assistants we all imagined.

This project started as a prototype to validate features for Marfa, a personal AI assistant you can access over a phone call. But it quickly became something we use every day. We're open-sourcing the code so you can build your own. Or reach out if you want us to build something custom for your use case.

Here's how it all works under the hood.


The Tech Stack

Building a production-quality voice AI assistant requires getting several pieces right. Here's the stack we chose and why:

Voice AI Framework: LiveKit Agents

LiveKit is the backbone of this project. LiveKit Agents is its open-source framework for building real-time voice and video AI agents, and LiveKit's infrastructure is the same one that powers ChatGPT's Advanced Voice Mode.

What makes LiveKit valuable is how it handles the hard parts of voice AI: turn detection, interruption handling, streaming responses, and WebRTC connectivity. This lets you focus on assistant logic instead of audio engineering.

Speech-to-Text: Deepgram Nova-2

For transcription, we're using Deepgram's Nova-2 model. Latency is crucial for conversational AI: anything over about 300ms and interactions start feeling sluggish. Nova-2 transcribes accurately and fast enough for real-time conversation.

Large Language Models: Multi-Provider Fallback

The assistant uses a fallback adapter pattern with multiple LLM providers:

- OpenAI GPT-4.1: Primary model

- Anthropic Claude Haiku: Fast fallback with caching

- Google Gemini 2.5 Flash: Additional redundancy

This architecture provides reliability (if one provider has issues, others take over) and lets us experiment with different models for different interaction types.
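The fallback idea itself is simple ordering logic. Below is a minimal sketch in plain Python, assuming each provider is wrapped in a callable that returns text or raises an error on failure. `ProviderError`, `complete_with_fallback`, and the stand-in provider functions are hypothetical names for illustration; the project's actual fallback goes through its LLM adapter, which this sketch does not reproduce.

```python
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical error a provider wrapper raises when a request fails."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, call) pair in priority order; return (name, reply)."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for real OpenAI / Anthropic / Gemini clients.
def primary(prompt: str) -> str:
    raise ProviderError("rate limited")


def fast_fallback(prompt: str) -> str:
    return f"fallback answer to: {prompt}"


if __name__ == "__main__":
    used, reply = complete_with_fallback(
        "What's on my calendar?",
        [("primary", primary), ("fast_fallback", fast_fallback)],
    )
    print(used, "->", reply)
```

Ordering the list by preference keeps the primary model in charge while it is healthy; only failures move a request down the list.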

Text-to-Speech: Cartesia Sonic-3

For voice output, Cartesia's Sonic-3 provides natural-sounding speech with low latency. We've also tested ElevenLabs for projects requiring more voice customization.

Real-Time Web Search: Perplexity API

This is what makes the assistant actually useful day to day. When you ask "what's the weather?" or "what happened in the news?", the assistant queries Perplexity's Search API and answers from current results instead of stale training data.

Gmail & Calendar Integration: Composio

Composio handles OAuth and API integration with Google services. Through Composio, the assistant can:

- Fetch and search emails

- Send emails and manage drafts

- Create, update, and delete calendar events

- Find free time slots for scheduling

- Read today's agenda

Key Features That Make It Production-Ready

Wake Word Activation

The assistant doesn't continuously process everything it hears. It stays idle until it detects its wake word, configurable to any name you want.

```python
wakeupKeywords = [
    self.assistant_name,
    "hey " + self.assistant_name.lower(),
    "hello " + self.assistant_name.lower(),
]
```
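Matching those phrases against a transcript works best once both sides are normalized (lowercased, punctuation stripped). A minimal sketch, assuming the assistant receives plain-text transcripts from the STT stream; `normalize` and `contains_wake_word` are hypothetical helpers, not the project's code.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and strip punctuation so "Hey, Jarvis!" matches "hey jarvis"."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def contains_wake_word(transcript: str, assistant_name: str) -> bool:
    """Hypothetical check mirroring the keyword list above."""
    name = normalize(assistant_name)
    keywords = [name, "hey " + name, "hello " + name]
    # Pad with spaces so the name does not match inside a longer word.
    padded = f" {normalize(transcript)} "
    return any(f" {kw} " in padded for kw in keywords)


print(contains_wake_word("Hey, Jarvis! What's next?", "Jarvis"))  # True
print(contains_wake_word("Jarvisville is a town", "Jarvis"))  # False
```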

After ~10 seconds of silence, the assistant enters an "away" state. It's still listening for the wake word, but won't process background audio. This saves compute costs and prevents false activations.
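The active/away behavior amounts to a small state machine driven by timestamps. A minimal sketch, assuming the ~10-second idle timeout described above and a caller that reports each transcript along with whether it contained the wake word; `WakeState` and `on_transcript` are hypothetical names.

```python
import time
from dataclasses import dataclass
from typing import Optional

AWAY_AFTER_SECONDS = 10.0  # roughly the ~10 s of silence described above


@dataclass
class WakeState:
    """Hypothetical tracker for the active vs. "away" state."""

    active: bool = False
    last_activity: float = 0.0

    def on_transcript(self, heard_wake_word: bool, now: Optional[float] = None) -> bool:
        """Return True if this transcript should be processed by the LLM."""
        now = time.monotonic() if now is None else now
        # Drop back to "away" once the conversation has been quiet long enough.
        if self.active and now - self.last_activity > AWAY_AFTER_SECONDS:
            self.active = False
        # The wake word always (re)activates the assistant.
        if heard_wake_word:
            self.active = True
        if self.active:
            self.last_activity = now
        return self.active
```

Passing `now` explicitly keeps the logic easy to test; in a live session you would leave it out and let `time.monotonic()` supply the clock.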

Voice-Controlled Mute

Privacy matters. Say "mute" and the assistant goes completely silent. It won't respond to anything except "unmute" or the wake word. Essential for calls, meetings, or whenever you need it to stay quiet.
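Mute handling can sit in front of everything else as a filter on incoming transcripts. A minimal sketch, assuming exact one-word "mute"/"unmute" commands and a separate wake-word check whose result is passed in as a flag; `MuteGate` is a hypothetical name, not the project's class.

```python
from dataclasses import dataclass


@dataclass
class MuteGate:
    """Hypothetical gate that decides whether a transcript reaches the assistant."""

    muted: bool = False

    def allow(self, transcript: str, heard_wake_word: bool = False) -> bool:
        command = transcript.strip().lower().rstrip(".!?")
        if command == "mute":
            self.muted = True
            return False  # the command itself is not forwarded
        if command == "unmute":
            self.muted = False
            return False
        if self.muted and heard_wake_word:
            self.muted = False  # the wake word also lifts mute
        return not self.muted
```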

Dynamic Tool Loading

This is where the architecture gets interesting. Instead of loading every capability at startup (which bloats the context window and slows responses), the assistant detects user intent and loads only relevant tools on-demand.

```python
# Dynamically load tools based on detected intent
if user_message:
    intent_tools = self.tool_manager.get_tools_for_intent(user_message)
```

Ask about your calendar? Calendar tools load. Ask about email? Gmail tools load. Tools unused for 3 conversation turns automatically unload. It's like garbage collection for AI capabilities.
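The load-on-demand and unload-after-three-turns behavior boils down to tracking when each tool category was last needed. A minimal sketch, assuming tools are grouped by category and the intent detector yields a set of category names per user turn; `ToolLifecycle`, `start_turn`, and `end_turn` are hypothetical stand-ins, not the API of the project's `dynamic_tool_manager.py`.

```python
from dataclasses import dataclass, field

IDLE_TURN_LIMIT = 3  # unload a category after 3 turns without use


@dataclass
class ToolLifecycle:
    """Hypothetical tracker: which tool categories are loaded, and since when."""

    turn: int = 0
    last_used: dict[str, int] = field(default_factory=dict)

    def start_turn(self, intents: set[str]) -> set[str]:
        """Register this turn's intents and return the categories to expose."""
        self.turn += 1
        for category in intents:
            self.last_used[category] = self.turn  # load or refresh
        return set(self.last_used)

    def end_turn(self) -> set[str]:
        """Unload categories idle for IDLE_TURN_LIMIT turns; return what was dropped."""
        stale = {c for c, t in self.last_used.items() if self.turn - t >= IDLE_TURN_LIMIT}
        for category in stale:
            del self.last_used[category]
        return stale
```

In the project's structure, the cleanup step would naturally run where `on_user_turn_completed` does its work.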

The intent detection uses keyword matching against categories:

```python
CALENDAR_KEYWORDS = [
    "calendar", "schedule", "meeting", "appointment",
    "event", "reschedule", "free time", "availability",
]

EMAIL_KEYWORDS = [
    "email", "mail", "inbox", "message", "send",
    "reply", "draft", "unread",
]
```
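Matching against those lists is safer with whole-word checks, so that "sender" does not trigger "send" while plurals like "emails" still match. A minimal sketch reusing the two keyword lists; `detect_intents` is a hypothetical helper, and the project's `get_tools_for_intent` may match differently.

```python
import re

CALENDAR_KEYWORDS = [
    "calendar", "schedule", "meeting", "appointment",
    "event", "reschedule", "free time", "availability",
]
EMAIL_KEYWORDS = [
    "email", "mail", "inbox", "message", "send",
    "reply", "draft", "unread",
]
CATEGORIES = {"calendar": CALENDAR_KEYWORDS, "email": EMAIL_KEYWORDS}


def detect_intents(message: str) -> set[str]:
    """Return every category with a keyword present as a whole word (optional plural "s")."""
    text = message.lower()
    found: set[str] = set()
    for category, keywords in CATEGORIES.items():
        for kw in keywords:
            if re.search(r"\b" + re.escape(kw) + r"s?\b", text):
                found.add(category)
                break  # one hit is enough for this category
    return found
```

Plain keyword matching is cheap and predictable, but this sketch would miss phrasings such as "Cancel my 3 o'clock" that contain none of the keywords.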

Pre-Action Acknowledgment

When executing time-consuming operations (creating events, sending emails, searching), the assistant acknowledges immediately with natural phrases like "Let me handle that" or "Working on it" before the action completes. Small detail, but it makes interactions feel responsive.
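One way to get that behavior is to start speaking the acknowledgment concurrently with the slow action, so the user hears feedback while the tool call is still running. A minimal asyncio sketch; `say` and the action coroutine are hypothetical placeholders for the TTS output and a Composio or Perplexity request.

```python
import asyncio
import random
from typing import Awaitable, Callable

ACKNOWLEDGMENTS = ["Let me handle that.", "Working on it."]


async def run_with_ack(
    say: Callable[[str], Awaitable[None]],
    action: Callable[[], Awaitable[str]],
) -> str:
    """Speak a short acknowledgment while the slow action runs concurrently."""
    ack = asyncio.create_task(say(random.choice(ACKNOWLEDGMENTS)))
    try:
        return await action()
    finally:
        await ack  # let the acknowledgment finish before the result is spoken


async def demo() -> None:
    spoken: list[str] = []

    async def say(text: str) -> None:  # hypothetical TTS stand-in
        spoken.append(text)

    async def create_event() -> str:  # hypothetical slow API call
        await asyncio.sleep(0.1)
        return "Event created."

    result = await run_with_ack(say, create_event)
    print(spoken, result)


if __name__ == "__main__":
    asyncio.run(demo())
```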

Architecture Overview

```
voice_ai_assistant/
├── src/
│   ├── agent.py                      # Core assistant logic
│   ├── services/
│   │   └── perplexity_service.py     # Web search integration
│   ├── tools/
│   │   ├── web_search_tools.py       # Perplexity wrapper
│   │   ├── composio_tools_dynamic.py # Gmail/Calendar tools
│   │   └── dynamic_tool_manager.py   # Intent-based loading
│   └── utils/
│       └── instructions.py           # System prompts
├── .env.example
└── pyproject.toml
```

The Assistant class extends LiveKit's Agent base class, overriding key methods:

- stt_node: Intercepts transcription to handle wake word detection and mute state

- llm_node: Manages dynamic tool loading based on conversation intent

- on_user_turn_completed: Cleans up unused tools after each turn

Getting Started

Prerequisites

- Python 3.10+

- LiveKit account (cloud or self-hosted)

- API keys: Deepgram, OpenAI/Anthropic/Google, Cartesia or ElevenLabs, Perplexity

- Composio account with Gmail and Google Calendar connected

Installation

```shell
git clone https://github.com/MarfaAI/voice_ai_assistant.git
cd voice_ai_assistant
uv sync
```

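Create .env.local based on .env.example. The variables below cover LiveKit, Composio, and Perplexity; the provider keys listed under Prerequisites (Deepgram, your LLM provider, Cartesia or ElevenLabs) also need to be set, so check .env.example for their exact names: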

```
LIVEKIT_URL=your_livekit_url
LIVEKIT_API_KEY=your_api_key
LIVEKIT_API_SECRET=your_api_secret
COMPOSIO_API_KEY=your_composio_key
PERPLEXITY_API_KEY=your_perplexity_key
```
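A missing or unedited key often surfaces only when the first request fails, so a startup check can save some debugging. A minimal stdlib sketch, assuming the variables above are already loaded into the process environment (by your shell or a dotenv loader) and that unedited values still start with the `your_` placeholder prefix shown above; `check_env` is hypothetical and not part of the repo.

```python
import os
from typing import Mapping, Optional

REQUIRED_VARS = [
    "LIVEKIT_URL",
    "LIVEKIT_API_KEY",
    "LIVEKIT_API_SECRET",
    "COMPOSIO_API_KEY",
    "PERPLEXITY_API_KEY",
]


def check_env(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return required variables that are missing, empty, or still placeholders."""
    env = os.environ if env is None else env
    missing = []
    for name in REQUIRED_VARS:
        value = env.get(name, "").strip()
        if not value or value.startswith("your_"):
            missing.append(name)
    return missing
```

Calling `check_env()` early in the entrypoint and exiting with the returned names gives a clear error instead of a failed connection later.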

Running

```shell
python -m src.agent dev
```

You can find more setup details in the repo's README.

Example Interactions

Calendar:

- "What's on my calendar today?"

- "Schedule a meeting with the team tomorrow at 2pm"

- "When am I free this week?"

- "Cancel my 3 o'clock"

Email:

- "Any new emails?"

- "Read my latest email from Sarah"

- "Send an email to the team about the project update"

- "Do I have anything from legal?"

Real-Time Information:

- "What's the weather?"

- "Latest news about AI"

- "Current Bitcoin price"

- "Who won the Lakers game?"

Extending to Phone Calls

This implementation runs as a console app, but adding phone support is straightforward with LiveKit's telephony stack.

The path:

- Provision a phone number (Twilio, Vonage, etc.)

- Configure SIP trunking with LiveKit

- Route incoming calls to your agent

Your personal Jarvis becomes reachable from any phone. We haven't included telephony in the open-source version, but we've implemented this for client projects. Reach out if you need phone-enabled voice AI.

Want Something Like This For Your Business?

This open-source project demonstrates what's possible with modern voice AI infrastructure. But every business has different needs:

- Custom integrations: CRM, ERP, internal tools, databases

- Industry-specific knowledge: Healthcare, legal, finance, real estate

- Multi-language support: Agents that work across languages

- Phone/SMS channels: Customer-facing voice bots

- Compliance requirements: HIPAA, SOC2, data residency

We build production voice AI systems. From prototype to deployment, we handle the complexity so you get a solution that actually works.

Check out Marfa to try a personal AI assistant over the phone, or get in touch to discuss your project.